A HARD NUT TO CRACK

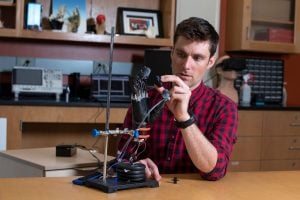

Osborn arrived in Thakor’s lab in the fall of 2012. As an undergraduate at the University of Arkansas, he had been interested primarily in pure robotics, but by the time he started graduate school, he wanted to do work with medical applications. Thakor’s lab seemed like the perfect fit: for more than 25 years, Thakor has worked to develop better methods for controlling prosthetic limbs, both at Johns Hopkins and at a companion lab in Singapore.

Osborn quickly turned his attention to the problem of sensation. Control systems for prosthetic devices have improved considerably over the last decade, but few efforts have tried to let amputees feel touch through their prosthetic limbs.

“Touch is really interesting,” says Paul Marasco, a sensory neurophysiologist who works on bionic prosthetic devices at Cleveland Clinic. (He was not involved in Osborn’s project.) “The individual touch sensors in the skin don’t really provide you with a cohesive perception of touch. The brain has to take all that information and put it all together and make sense of it. The brain says, ‘Well, OK, if I have a little bit of this and a little bit of that, and there’s a little bit of this sprinkled in, then that probably means that this object is slippery. Or this object is made out of metal, or it feels soft.’ None of those perceptions map simply onto a single type of sensory neuron. All of them require the brain to integrate data from several different types of neurons. That’s one reason why sensation has been such a hard nut to crack and why there are so few labs doing it.”

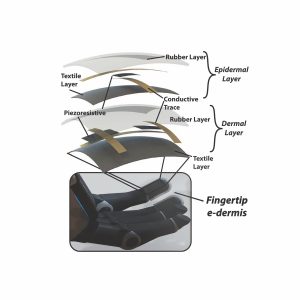

Osborn was determined to try. He began his project in 2013 by looking for sensor materials that would be flexible enough to fit smoothly over the fingertips of a prosthesis but tough enough to withstand repeated contact with diverse objects. After several rounds of trial and error, he developed a rubber fabric that encases two layers of small silicone-based piezoresistive sensors. The team calls this fabric the “e-dermis.”

The two layers of the e-dermis mimic the two primary layers of human skin: the epidermis and the dermis. On the outer, “epidermal” layer, Osborn’s team designed certain sensors to behave like human nociceptors, which detect noxious, painful stimuli. In the deeper, “dermal” layer, the sensors mirror four types of neurons known as mechanoreceptors, which variously detect light touch and sustained pressure.

“It’s a pattern that’s biomimetic—a sensor array that matches what our nerve endings are used to,” Thakor says. “Luke’s team made a meticulous effort here to get the patterns right.”

As he developed the fingertip sensors, Osborn initially performed benchtop experiments using a prosthetic hand that was not attached to a human user. In these purely robotic trials, he developed two reflex responses that mimic human spinal reflexes. First, he trained the hand to tighten its grip if the fingertip sensors told it that an object was slipping. Second, he trained the hand to automatically drop a painful object.
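The two reflexes can be pictured as a small rule-based loop that runs on every new set of fingertip readings. The Python sketch below is only an illustration under stated assumptions, not the team's controller: the names `reflex_step` and `HandState`, the normalized 0-to-1 pressure scale, and all threshold values are hypothetical. It treats a high reading on the outer, nociceptor-like layer as painful and a sudden drop in pressure on the deeper layer as a sign that the object is slipping.

```python
from dataclasses import dataclass

# Hypothetical values chosen only for illustration; not taken from the study.
PAIN_THRESHOLD = 0.8  # normalized pressure on the outer ("epidermal") sensors
SLIP_DROP = 0.15      # fractional drop in deeper ("dermal") pressure treated as slip
GRIP_STEP = 0.1       # how much to tighten the grip after a detected slip
MAX_GRIP = 1.0        # upper limit on the commanded grip force


@dataclass
class HandState:
    grip: float  # commanded grip force, normalized to [0, 1]


def reflex_step(state: HandState, prev_dermal: float, dermal: float, epidermal: float) -> str:
    """Apply one hypothetical reflex decision to a new set of fingertip readings."""
    # Pain reflex is checked first: open the hand if the outer layer reports a noxious stimulus.
    if epidermal >= PAIN_THRESHOLD:
        state.grip = 0.0
        return "release"
    # Slip reflex: a sudden relative drop in sustained pressure suggests the object is sliding.
    if prev_dermal > 0 and (prev_dermal - dermal) / prev_dermal >= SLIP_DROP:
        state.grip = min(MAX_GRIP, state.grip + GRIP_STEP)
        return "tighten"
    # Otherwise keep the current grip unchanged.
    return "hold"


hand = HandState(grip=0.5)
print(reflex_step(hand, prev_dermal=1.0, dermal=0.8, epidermal=0.1))  # pressure fell 20 percent
```

Checking the pain condition before the slip condition means a painful object is dropped even if it is also slipping, which fits the purpose of a protective reflex. A working controller would also need filtering of noisy readings and limits matched to the actual hand.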

The challenge here was speed: Human spinal reflexes operate within 100 to 200 milliseconds (think of how fast you react to a hot stove), and Osborn’s team wanted to match that speed. To accomplish that, the prosthetic hand had to correctly determine within just 70 milliseconds that it was grasping something painful.

“We were able to achieve that quick decision by looking at a few key features of the pressure signal from the e-dermis,” Osborn says. “These features include information such as where the pressure is located, how large the pressure is, and how quickly the pressure changes.”
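The three features Osborn describes (where the pressure is, how large it is, and how quickly it changes) are simple to compute from a short window of sensor readings. The sketch below assumes, hypothetically, that the e-dermis delivers each reading as a small grid of normalized pressures sampled every `dt_ms` milliseconds. The functions `pressure_features` and `looks_painful` and the thresholds in `looks_painful` are illustrative names and values, not the team's method.

```python
from typing import List, Tuple

# Hypothetical sensor format: each frame is a grid of normalized pressures, one per sensing point.
Frame = List[List[float]]


def pressure_features(frames: List[Frame], dt_ms: float) -> Tuple[Tuple[float, float], float, float]:
    """Return (centroid, peak, rate) for a non-empty window of frames spaced dt_ms apart."""
    latest = frames[-1]
    total = sum(sum(row) for row in latest)
    # Where the pressure is: pressure-weighted (row, col) centroid of the newest frame.
    if total > 0:
        r = sum(i * p for i, row in enumerate(latest) for p in row) / total
        c = sum(j * p for row in latest for j, p in enumerate(row)) / total
    else:
        r = c = 0.0
    # How large it is: highest single-point pressure in the newest frame.
    peak = max(max(row) for row in latest)
    # How quickly it changes: change in peak pressure per millisecond across the window.
    first_peak = max(max(row) for row in frames[0])
    elapsed = dt_ms * (len(frames) - 1)
    rate = (peak - first_peak) / elapsed if elapsed > 0 else 0.0
    return (r, c), peak, rate


def looks_painful(frames: List[Frame], dt_ms: float,
                  peak_limit: float = 0.8, rate_limit: float = 0.02) -> bool:
    """Toy rule with hypothetical thresholds: flag very high or very fast-rising pressure."""
    _, peak, rate = pressure_features(frames, dt_ms)
    return peak >= peak_limit or rate >= rate_limit


# Two frames 10 ms apart: pressure at one corner jumps from 0.2 to 1.0.
window = [[[0.0, 0.0], [0.0, 0.2]], [[0.0, 0.0], [0.0, 1.0]]]
print(pressure_features(window, dt_ms=10.0), looks_painful(window, dt_ms=10.0))
```

In this toy rule the location feature is computed but not used; in a fuller system all three features could feed a trained classifier instead of fixed thresholds. Working from only a few frames is what keeps a decision of this kind within a budget as tight as 70 milliseconds.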

AN HOUR HERE, AN HOUR THERE